THREATS OF AI IN 2026

Dec 27 - Jan 02, 2026, In the News, December 26, 2025

Artificial Intelligence (AI) brings enormous benefits, but when deployed without care, regulation, and accountability, it can cause serious social, economic, political, and ethical harm. Below is an overview of the main risks.

- Bias, Discrimination & Injustice

• AI systems learn from historical data, which often contains embedded human prejudice.

• The results can include discrimination in hiring, lending, policing, healthcare, and welfare.

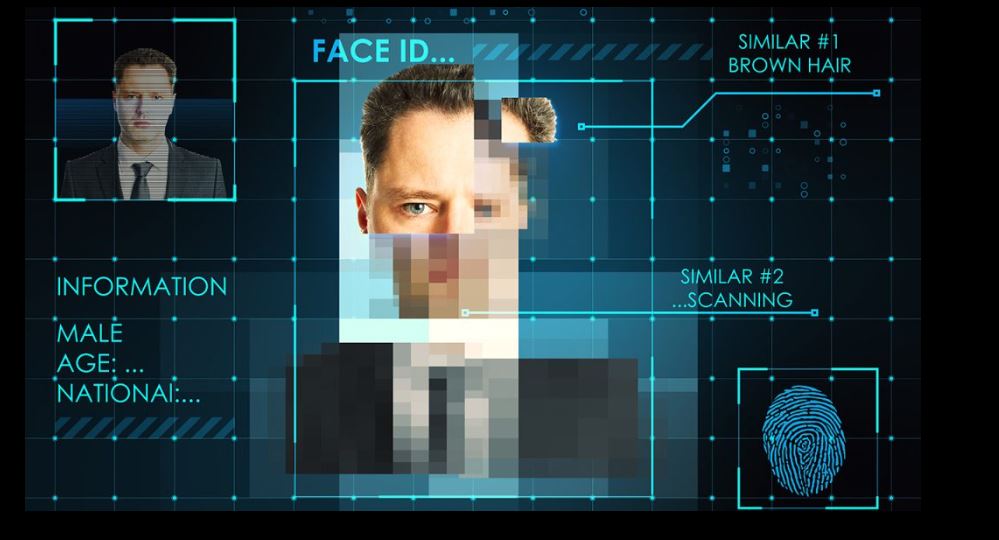

• Facial-recognition tools have shown higher error rates for women and darker-skinned people, leading to wrongful suspicion or arrests.

Harm: Systemic injustice becomes automated and scaled.

- Misinformation, Deepfakes & Propaganda

• AI can generate fake videos, voices, images, and news that look real.

• Deepfakes undermine trust in elections, courts, journalism, and public discourse.

• Citizens can no longer easily distinguish truth from fabrication.

Harm: Democracy, public trust, and social cohesion erode.

- Surveillance & Loss of Privacy

• Governments and corporations use AI for mass surveillance, facial recognition, and behavioural tracking.

• Often deployed without consent or transparency.

• Can be used to suppress dissent or target minorities.

Harm: Chilling effect on freedom, speech, and civil liberties.

- Job Displacement & Economic Inequality

• AI automates tasks across journalism, transport, customer service, manufacturing, and even medicine.

• Job losses tend to fall hardest on low- and middle-income workers.

• Wealth concentrates among tech owners and data controllers.

Harm: Growing inequality and social instability.

- Opaque Decision-Making (“Black Box” Problem)

• Many AI systems cannot explain why they made a decision.

• A person may be denied a loan, parole, or treatment without a clear reason or appeal.

Harm: Accountability disappears, and decisions become impossible to challenge.

- Weaponization & Security Risks

• AI is used in autonomous weapons, cyberattacks, and surveillance warfare.

• Machines may make life-and-death decisions without human judgment.

Harm: Escalation of conflict and loss of human moral control.

- Cultural & Intellectual Harm

• AI-generated content can:

o Devalue human creativity

o Plagiarize writers, artists, and journalists

o Flood public space with low-quality or manipulated material

Harm: Human authorship, originality, and credibility are weakened.

- Health & Psychological Impact

• Overreliance on AI can reduce critical thinking.

• Chatbots may give incorrect medical or emotional advice.

• Algorithmic feeds amplify addiction, anxiety, and polarization.

Harm: Mental health deterioration and dependency.

The Core Problem

AI itself is not evil — harm arises from who controls it, how it is trained, and whether it is regulated.

What Can Reduce Harm

• Strong laws on data protection, transparency, and accountability

• Mandatory human oversight in critical decisions

• Public-interest journalism and audits of AI systems

• Ethical standards rooted in human rights, not profit alone

When Artificial Intelligence Becomes Artificial Injustice

An Op-Ed

Artificial Intelligence is being sold to the public as a neutral, almost divine force — efficient, objective, tireless. Governments celebrate it, corporations worship it, and citizens are told it is inevitable. That narrative is dangerously incomplete.

AI is not an independent intelligence. It is a mirror of power — reflecting the prejudices, priorities, and profits of those who design and deploy it. When unchecked, AI does not merely assist society; it amplifies inequality, erodes democracy, and weakens human agency.

Bias at Machine Speed

AI systems are trained on historical data — and history is deeply unequal. When prejudice enters an algorithm, it becomes systemic, invisible, and fast.

Whether in recruitment, policing, credit scoring, or welfare delivery, biased AI decisions are often treated as “objective” simply because a machine produced them. The result is the automation of injustice, with no human face to question and no clearly accountable authority.

Truth in the Age of Deepfakes

AI-generated images, voices, and videos have shattered the idea of verifiable truth. A politician can plausibly deny a real recording. A fake speech can trigger violence before fact-checkers even wake up.

Democracy depends on a shared sense of reality. AI-driven misinformation is not just a technological problem — it is a constitutional threat.

Surveillance Without Consent

AI-powered facial recognition and behavioural tracking are spreading faster than public debate or legal safeguards. Citizens are monitored not because they are suspects, but because they are data.

The danger is not science fiction. It is the quiet normalisation of surveillance — where fear replaces freedom and dissent becomes a data point.

Jobs Lost, Wealth Concentrated

AI is replacing not only manual labour but also cognitive work, such as that of journalists, translators, designers, and call-centre staff. Productivity rises, but the benefits flow upward.

Without social safeguards, AI risks creating an economy where efficiency grows while livelihoods disappear.

The Black Box of Power

Perhaps the most disturbing aspect of AI is its opacity. People are denied loans, insurance, parole, or medical priority without understanding why. When decisions cannot be explained, they cannot be challenged.

Justice without explanation is not justice. It is algorithmic authority masquerading as progress.

The Real Question

The issue is not whether AI is good or bad. The real question is:

Who controls it? Who audits it? Who benefits — and who pays the price?

Technology without ethics becomes tyranny by design.

A Way Forward

AI must serve humanity, not replace human judgment. This requires:

• Strong transparency and accountability laws

• Mandatory human oversight in critical decisions

• Protection of data as a civic right, not a commodity

• Journalism that interrogates algorithms rather than glorifying them

Artificial Intelligence can assist human progress — but only if human values remain in command.

If we sacrifice ethics at the altar of efficiency, we may gain smarter machines and lose a just society.
